As artificial intelligence (AI) continues to accelerate, the question of how AI should be governed, and by whom, grows more urgent. Recent developments in the U.S. Senate illustrate this tension. A proposed AI moratorium that would have barred individual states from passing their own AI laws for ten years was quietly removed from a broader legislative package following intense public backlash. The reversal exposes a core dilemma: While national regulation is essential, it often struggles to keep pace with technological change and to curb emerging harms. What happens in the meantime?

At ThoughtsWin Systems, we believe the answer lies in empowering local and provincial governments, institutions, and organizations to take meaningful steps now, giving national policymakers the room they need to build consensus. That means building robust, values-driven governance practices that balance innovation with accountability.

This isn’t about replacing federal oversight. It’s about building the kind of localized frameworks that not only respond to current needs, but also help shape smarter, more inclusive national policy over time.

Most experts agree that federal policy will play a critical role in standardizing AI safety, transparency, and accountability. Whether in Canada, the United States, or elsewhere, national frameworks can enforce baseline expectations and reduce regulatory fragmentation.

But there’s a catch: National legislation takes time. Debate, committee revisions, and political concessions often leave policies lagging behind technological developments. Worse still, the lack of clarity at the national level, combined with rigid moratoriums such as the proposed U.S. ban on state AI laws, risks stalling experimentation and meaningful progress at the local level, where the impacts of AI are already being felt.

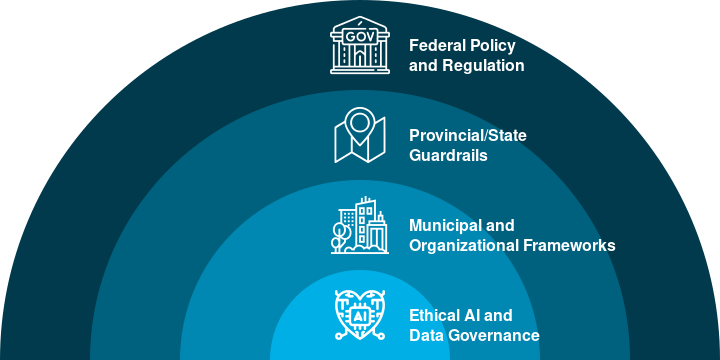

AI governance is most effective when structured across complementary layers—each supporting oversight, safety, and innovation from the ground up.

Rather than being seen as a risk to national cohesion, regional efforts should be treated as test beds for ethical AI. Local policies can reveal what works and what doesn’t—generating valuable insights for broader regulation.

These experiments aren’t just useful; they’re vital.

Local AI governance sandboxes offer a safe, adaptable space for testing, improving, and scaling responsible AI practices.

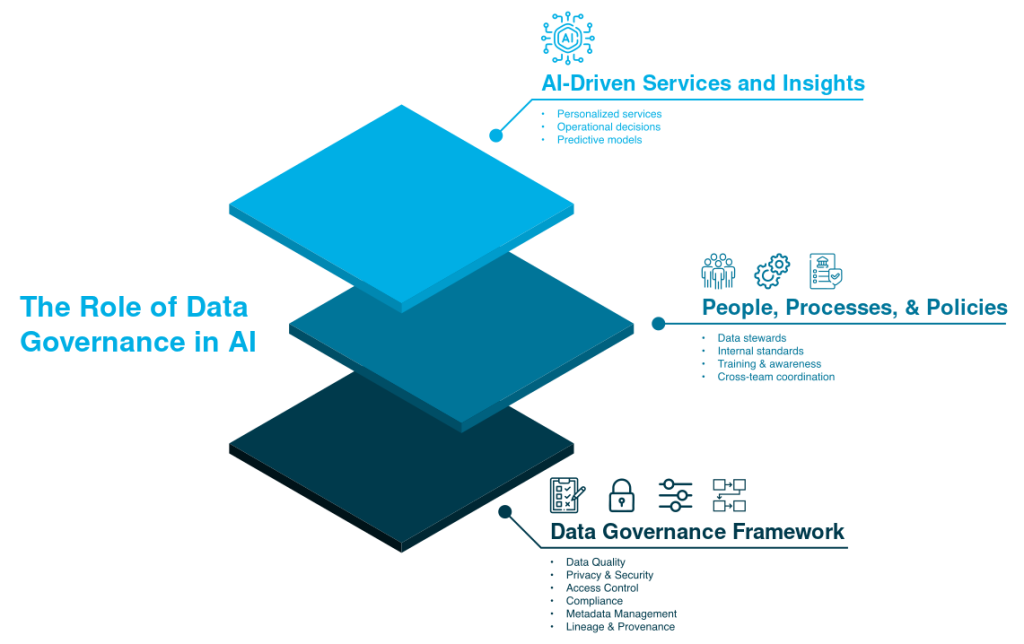

Strong AI governance begins with strong data governance. Without clarity around how data is collected, used, protected, and shared, even the most sophisticated AI system can produce harmful or biased outcomes. That’s why we encourage clients to treat data governance not as a compliance checkbox, but as an operational foundation.

Effective governance frameworks help organizations:

Critically, this kind of governance isn’t just about technology; it’s about people, culture, and process. Governance frameworks must reflect the values of the communities they serve. That means grounding decision-making in transparency, equity, and meaningful stakeholder engagement.

Strong data governance enables AI to operate with accountability, consistency, and fairness.

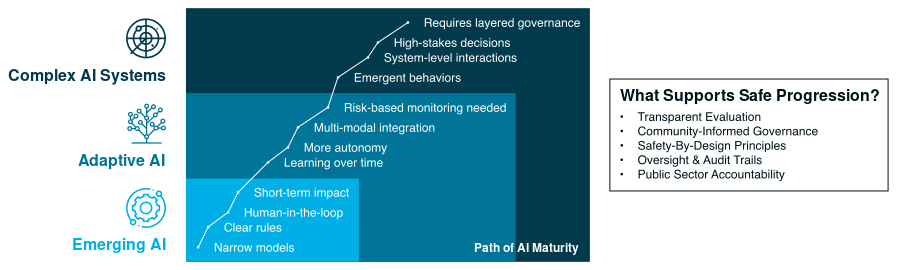

In practice, long-term accountability can be supported by integrating methods such as:

Involving communities in shaping how AI is used, and making sure decisions are transparent, helps build trust and reduce risk as these technologies become part of everyday life.

As AI systems become more advanced, governance strategies must grow with them to ensure safe, responsible deployment.

At ThoughtsWin, we help local and regional organizations build AI governance models that are actionable, adaptable, and aligned to real-world needs. Our work spans public and private sectors—from municipalities seeking intelligent infrastructure to agencies digitizing legacy decision-making processes.

By embedding governance into operations—not just strategy documents—we help clients future-proof their AI investments and build trust with stakeholders.

The removal of the proposed U.S. moratorium on state AI laws is encouraging, but the debate is far from over, and other countries are watching closely. Whether or not national governments, including Canada’s, opt to impose top-down controls, the reality is this: AI is already shaping decisions, policies, and services at the local level. And local leaders must act accordingly.

ThoughtsWin believes local experimentation, guided by principled governance, can coexist with and inform national AI policy. By supporting clients in building practical, value-aligned governance today, we’re helping lay the groundwork for smarter, safer AI tomorrow.