The advent of large language models (LLMs) has marked a significant turning point in the field of artificial intelligence. Since the transformer architecture that underpins them was introduced in 2017, these models have reshaped the AI landscape, and the launch of ChatGPT in 2022 brought LLMs into the mainstream. That launch not only highlighted the capabilities of LLMs but also underscored their potential to be the most transformative technology since the internet. From internet search to image creation to many forms of writing, LLMs are reshaping both everyday activities and critical business functions, particularly in white-collar professions.

Despite the apparent dominance of OpenAI’s ChatGPT in 2022–23, the competitive landscape of LLMs has since evolved dramatically. 2023 alone saw the introduction of hundreds of new LLMs, with major players such as Meta, Amazon, Google, Microsoft, and Anthropic entering the fray. These developments have not only narrowed the performance gaps among models but have also driven down inference costs significantly (by more than half, in our observation).

Yet, the widespread enterprise adoption of LLM-based applications is still in its infancy. While CEOs across various industries have been quick to announce AI strategies, the practical deployment of these models faces significant hurdles. Challenges such as inaccuracies/misinformation, biases, and data security concerns, alongside the need for extensive data preprocessing, are prominent. Furthermore, use cases that perform well in controlled environments often struggle when scaled to production levels. Despite these obstacles, the potential for profit improvements and operational efficiency gains continues to drive interest and investment in LLM technologies across the corporate world.

Enterprises will increasingly look to leverage multi-modal models, agentic AI systems, and new model architectures as they begin to deploy LLM-based applications at scale. Below, we explore how each of these could transform various sectors.

The recent introduction of multi-modal AI, which processes text, images, audio, and video within a single model, marks a major milestone in AI development. OpenAI’s GPT-4o model exemplifies this leap, offering end-to-end processing capabilities that were quickly matched by Google and Anthropic and that bring the physical and digital worlds closer together.

Multi-modal models extend what voice assistants such as Siri or Alexa can do by adding the ability to interpret contextual cues from both audio and visual data. This means they can understand not just the words spoken but also the underlying emotions, tones, and facial expressions, leading to more nuanced and effective interactions.

The impact of multi-modal AI spans several industries. In healthcare, it offers transformative potential, as demonstrated by ThoughtsWin Systems’ partnership with mlHealth 360. Together, we are pioneering the generation of radiology reports for 3D medical imaging, specifically targeting CT volumes. In customer service, multi-modal models enable chatbots to handle voice interactions more naturally and accurately. Other sectors that frequently deal with varied data types, such as robotics, e-commerce, energy, and manufacturing, are also set to benefit immensely. The innovation lab at ThoughtsWin Systems has been instrumental in driving these advancements, running initiatives that push the boundaries of what AI can achieve.

Because multi-modal models can analyze and produce content across multiple formats, they open up new possibilities for content repurposing, allowing marketing teams to quickly transform existing content into new formats or campaigns. They also facilitate multilingual adaptation, converting content into multiple languages for global distribution. Training is another significant application: multi-modal models can create immersive, interactive scenarios for employee onboarding and skill development.

While multi-modal AI models have the ability to handle diverse types of input and output, integrating them with compound AI systems, or multi-agent systems, takes automation to the next level by enabling the execution of complex tasks and processes.

Multi-agent systems work by breaking down a large goal into a series of manageable steps, executing each step in sequence, and passing the output from one step to the next to achieve the final outcome. These systems can independently plan and take action based on their evaluation of a situation, meaning they have agency. An agent, or several agents, can decide to engage various interacting systems and may even consult humans when necessary.
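To make the step-by-step hand-off described above concrete, here is a minimal sketch in Python. Everything in it is illustrative: the three "agents" (`research`, `draft`, `review`) are plain functions standing in for LLM-backed workers, and `run_pipeline` is a hypothetical helper, not the API of any particular agent framework.

```python
from typing import Callable, List

# Each "agent" is a plain function standing in for an LLM-backed worker.
# The names and behaviors are hypothetical placeholders.
Step = Callable[[str], str]


def research(goal: str) -> str:
    return f"notes on '{goal}'"


def draft(notes: str) -> str:
    return f"draft based on {notes}"


def review(text: str) -> str:
    return f"reviewed {text}"


def run_pipeline(goal: str, steps: List[Step]) -> str:
    """Run the steps in order, feeding each step's output into the next."""
    result = goal
    for step in steps:
        result = step(result)
    return result


final = run_pipeline("quarterly demand forecast", [research, draft, review])
print(final)
```

A real system would add planning (deciding which steps to run), error handling, and points where a human can be consulted; the sketch only shows the sequential hand-off.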

Unlike single-pass AI models, agents approach tasks similarly to humans tackling complex objectives. They collect information, develop initial solutions, self-assess to identify weaknesses, and revise their approaches for better results. This capability transforms AI from a passive tool into an active collaborator, assuming responsibility for entire projects.
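The draft, self-assess, and revise cycle can be sketched as a small loop. This is a simplified illustration under stated assumptions: `refine` and `ToyAgent` are hypothetical names, and the toy agent uses string checks where a real agent would call an LLM to propose and critique.

```python
from typing import Callable, List, Tuple


def refine(task: str,
           propose: Callable[[str, List[str]], str],
           critique: Callable[[str], List[str]],
           max_rounds: int = 3) -> Tuple[str, int]:
    """Draft a solution, self-assess it, and revise until no issues
    remain or the round budget is used up. Returns (solution, rounds)."""
    issues: List[str] = []
    solution = propose(task, issues)
    rounds = 1
    while rounds < max_rounds:
        issues = critique(solution)
        if not issues:
            break
        solution = propose(task, issues)
        rounds += 1
    return solution, rounds


class ToyAgent:
    """Hypothetical stand-in for an LLM: it 'fixes' whatever the critique lists."""

    def __init__(self) -> None:
        self.parts = ["code"]

    def propose(self, task: str, issues: List[str]) -> str:
        self.parts.extend(issues)
        return " + ".join(self.parts)

    def critique(self, solution: str) -> List[str]:
        return [item for item in ("docstring", "tests") if item not in solution]


agent = ToyAgent()
solution, rounds = refine("implement a parser", agent.propose, agent.critique)
print(solution, rounds)
```

The `max_rounds` cap matters in practice: without it, an agent whose critique never comes back empty would loop, and pay for LLM calls, indefinitely.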

Research has shown the substantial benefits of collaborative agentic systems. For example, Microsoft’s AutoGen project highlighted that multi-agent systems can significantly outperform individual agents in complex scenarios.

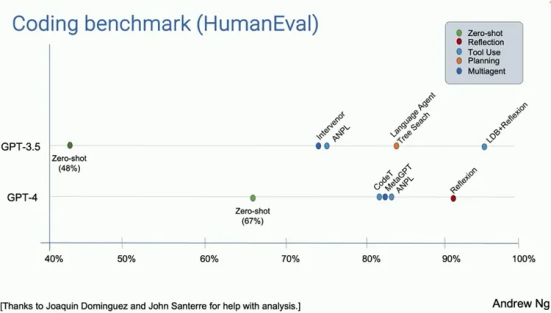

Dr. Andrew Ng demonstrated a compelling example involving coding benchmarks. Using the HumanEval Benchmark, which tests coding abilities, he compared the performance of GPT-3.5 and GPT-4. While GPT-4 significantly outperformed GPT-3.5 in a zero-shot prompting scenario, GPT-3.5 wrapped in an agentic workflow outperformed GPT-4. This illustrates the remarkable efficacy of agentic workflows, even with less advanced models.

As AI takes on more responsibilities, the design of agentic systems and their interfaces will need substantial innovation. Recent work, like the introduction of SWE-agent by John Yang and colleagues, shows that custom-built interfaces for agents are far more effective than traditional GUIs designed for human use. Optimizing digital interfaces for AI interactions will be crucial for future success, providing a competitive edge to early adopters.

However, deploying these agentic systems in production environments presents challenges. Agents typically rely on a series of LLM calls to determine their next actions, and the reliability of each call is critical for task success because errors compound. For example, if the best-performing model is 90% accurate on a single function call, a task requiring 5 consecutive function calls succeeds only about 59% of the time (0.9 multiplied by itself five times), assuming each call succeeds or fails independently. Ensuring that agents can execute long-running tasks with real-world data remains a significant challenge, as maintaining and recalling relevant information over extended periods is difficult for current LLM-powered agents.
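The drop from 90% to 59% follows from multiplying per-call success rates, under the simplifying assumption that calls succeed or fail independently of one another. The short sketch below reproduces the figure and inverts it to ask how reliable each call would need to be; the function names are our own, chosen for illustration.

```python
def chain_success(per_call_accuracy: float, num_calls: int) -> float:
    """Probability that every call in a chain succeeds,
    assuming calls succeed or fail independently."""
    return per_call_accuracy ** num_calls


def required_accuracy(target_success: float, num_calls: int) -> float:
    """Per-call accuracy needed to hit a target end-to-end success rate,
    under the same independence assumption."""
    return target_success ** (1.0 / num_calls)


for n in (1, 5, 10):
    print(n, round(chain_success(0.90, n), 2))  # 0.9, 0.59, 0.35

# Per-call accuracy needed for 90% success across 5 calls (about 0.979)
print(round(required_accuracy(0.90, 5), 3))
```

In real workloads errors are rarely independent (a bad early call often derails everything after it), so this should be read as a rough guide rather than a prediction.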

Future advancements will need to focus on grounding agents with accurate, up-to-date real-world data. As multi-agent systems continue to develop, their ability to autonomously manage complex tasks will transform various sectors, driving unprecedented levels of innovation and efficiency. For further exploration of this topic, see the Berkeley AI Research (BAIR) Lab blog.

As the AI landscape continues to evolve, the focus on scaling and improving transformer-based models remains strong, driven by significant startup activity and venture capital interest. Despite their impressive capabilities, transformers are not universally optimal. Emerging model architectures hold promise for being equally or more effective for specialized tasks, offering benefits such as reduced computational demands, lower latency, and greater control. Below are some emerging architectures that are showing promise:

State-Space Models: State-space models are emerging as a promising alternative to traditional transformer models, particularly for processing long, data-intensive sequences such as audio and video. Unlike transformers, which rely heavily on attention mechanisms, state-space models use a system of equations to describe how a hidden state evolves over time, which lets them handle long-term dependencies efficiently. For instance, AI21’s Jamba architecture, a hybrid that combines state-space (Mamba) layers with transformer layers, demonstrates the capability of this approach, and state-space models are being applied to tasks such as speech recognition, financial forecasting, and anomaly detection, where robust and efficient handling of complex, sequential data matters.
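The "system of equations" behind a state-space model can be illustrated with the textbook discrete linear form: the hidden state is updated as h_t = A·h_{t-1} + B·x_t and the output is read out as y_t = C·h_t. The sketch below is a toy version with hand-picked values for A, B, and C (our assumptions, for illustration only); production models learn these parameters and add considerably more machinery.

```python
from typing import List, Sequence


def ssm_scan(A: Sequence[Sequence[float]],
             B: Sequence[float],
             C: Sequence[float],
             xs: Sequence[float]) -> List[float]:
    """Run a discrete linear state-space model over a scalar input sequence.

    State update: h_t = A @ h_{t-1} + B * x_t   (h starts at zero)
    Readout:      y_t = C . h_t
    """
    n = len(B)
    h = [0.0] * n
    ys: List[float] = []
    for x in xs:
        h = [sum(A[i][j] * h[j] for j in range(n)) + B[i] * x for i in range(n)]
        ys.append(sum(C[i] * h[i] for i in range(n)))
    return ys


# Two state dimensions: one forgets quickly (0.5), one slowly (0.9).
A = [[0.5, 0.0],
     [0.0, 0.9]]
B = [1.0, 1.0]
C = [1.0, 1.0]

# Feed a single impulse and watch how long its effect persists.
ys = ssm_scan(A, B, C, [1.0, 0.0, 0.0, 0.0])
print([round(y, 3) for y in ys])  # [2.0, 1.4, 1.06, 0.854]
```

The slowly decaying dimension is what carries information across many time steps, and the state stays a fixed size no matter how long the input sequence is.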

RWKV Models: RWKV models adopt a hybrid approach, combining the parallelizable training of transformers with the linear scaling in memory and compute of recurrent neural networks (RNNs). The architecture introduces a recurrent mechanism for updating its receptance-weighted key-value representations (the source of the name RWKV), addressing the inefficiency of transformers on long sequences. As a result, RWKV models achieve performance comparable to transformers on language modeling tasks while being significantly more efficient during inference, making them an attractive option for tasks involving long-context dependencies.
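To see why a recurrent update scales linearly, consider a simplified sketch of an exponentially decayed, key-weighted average of values, loosely modeled on the key-value mixing idea in RWKV. This is our own stripped-down illustration, not the actual RWKV layer: it handles a single channel and leaves out the receptance gate, the other components of the real architecture, and the numerical stabilization a production implementation needs. The recurrent version keeps only two running numbers, while the direct version revisits every past token at every step, yet both produce the same outputs.

```python
import math
from typing import List, Sequence


def wkv_recurrent(ks: Sequence[float], vs: Sequence[float], w: float) -> List[float]:
    """Decayed, key-weighted average of values using O(1) state per step."""
    decay = math.exp(-w)
    num = 0.0  # running weighted sum of values
    den = 0.0  # running sum of weights
    out: List[float] = []
    for k, v in zip(ks, vs):
        num = decay * num + math.exp(k) * v
        den = decay * den + math.exp(k)
        out.append(num / den)
    return out


def wkv_direct(ks: Sequence[float], vs: Sequence[float], w: float) -> List[float]:
    """Same quantity computed attention-style: every step looks back at
    all earlier tokens, so total work grows quadratically with length."""
    out: List[float] = []
    for t in range(len(ks)):
        weights = [math.exp(-(t - i) * w + ks[i]) for i in range(t + 1)]
        out.append(sum(wt * vs[i] for i, wt in enumerate(weights)) / sum(weights))
    return out


ks = [0.1, -0.3, 0.5, 0.0]
vs = [1.0, 2.0, -1.0, 0.5]
rec = wkv_recurrent(ks, vs, w=0.7)
ref = wkv_direct(ks, vs, w=0.7)
print(all(abs(a - b) < 1e-9 for a, b in zip(rec, ref)))  # True
```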

Large Graphical Models: Large graphical models, aimed at tasks involving structured information and complex relationships between variables, are being developed by companies like Ikigai. These models are built on graphs that represent the relationships between variables. Graphical models, including Bayesian networks and Markov random fields, use nodes to represent variables and edges to show probabilistic dependencies, capturing intricate data patterns. These models excel at handling time-series data, making them particularly relevant for business forecasting tasks such as predicting demand, inventory, costs, and labor requirements.
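The nodes-and-edges idea is easiest to see in a tiny Bayesian network. The sketch below has three binary variables, with "promotion" and "peak season" both pointing at "high demand". The structure and every probability in it are made-up numbers for illustration, not figures from any real model or vendor. It computes the overall chance of high demand by enumerating the parent states, then reasons backwards to how likely a promotion was, given that demand turned out high.

```python
from itertools import product

# Hypothetical network: promo -> high_demand <- peak_season
# All probabilities below are illustrative assumptions.
P_PROMO = 0.3
P_PEAK = 0.25
P_HIGH_GIVEN = {  # P(high demand | promo, peak season)
    (True, True): 0.9,
    (True, False): 0.6,
    (False, True): 0.5,
    (False, False): 0.1,
}


def joint(promo: bool, peak: bool, high: bool) -> float:
    """Joint probability, factorized along the edges of the graph."""
    p_parents = (P_PROMO if promo else 1 - P_PROMO) * (P_PEAK if peak else 1 - P_PEAK)
    p_high = P_HIGH_GIVEN[(promo, peak)]
    return p_parents * (p_high if high else 1 - p_high)


# Marginal: sum the joint over every combination of parent states.
p_high = sum(joint(a, b, True) for a, b in product([True, False], repeat=2))

# Posterior via Bayes' rule: P(promo | high demand).
p_promo_given_high = sum(joint(True, b, True) for b in (True, False)) / p_high

print(round(p_high, 4))              # 0.3425
print(round(p_promo_given_high, 3))  # 0.591
```

Enumeration like this only works for very small graphs; larger graphical models depend on more scalable inference methods.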

As we continue to develop these advanced AI models, the availability of high-quality data for training is becoming a bottleneck. In the near future, we will likely need to rely increasingly on high-quality synthetic data to support many use cases, ensuring that AI models can continue to learn and improve effectively.

We are at a pivotal moment in the history of AI, where every layer of the AI stack is rapidly advancing. This fast-paced development brings uncertainty, but it also offers generational opportunities for innovation. Those who address major and persistent challenges could create solutions so innovative they may appear nearly miraculous.